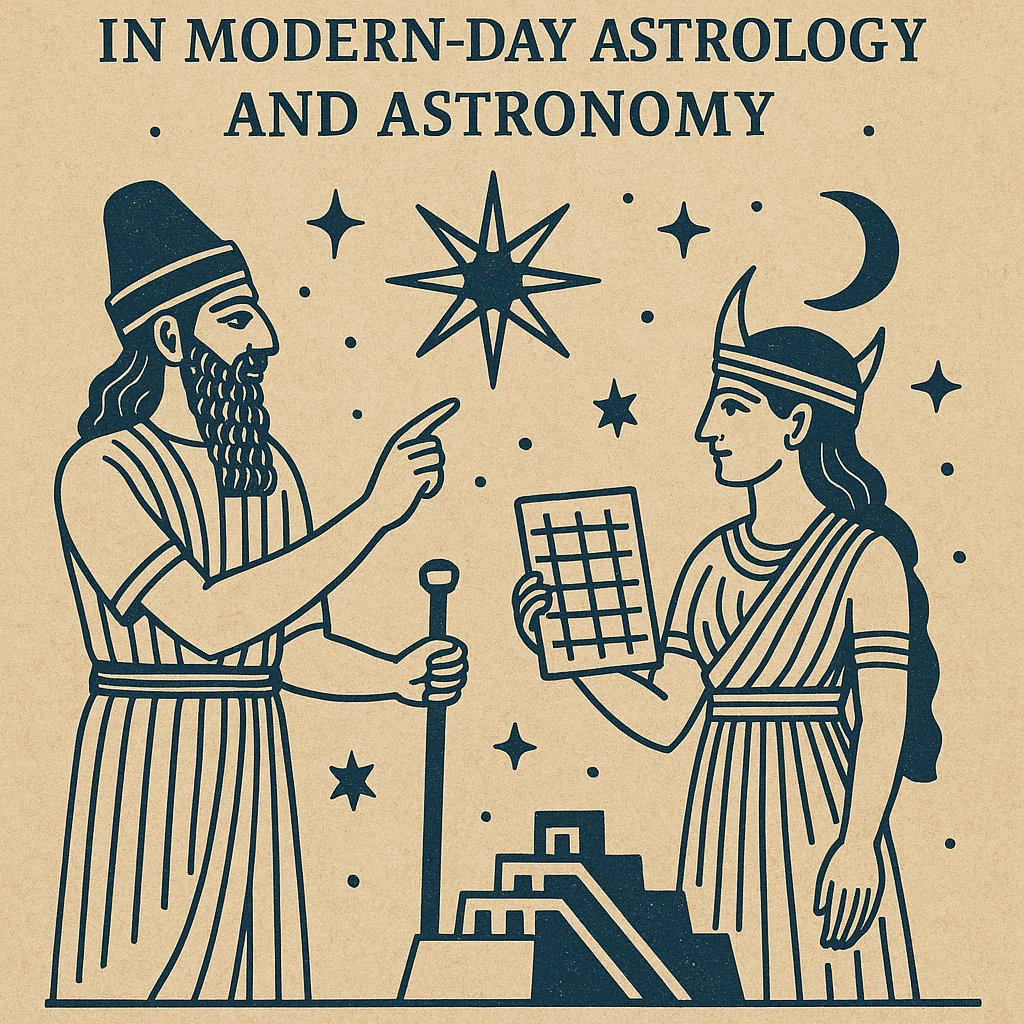

Artificial intelligence has reshaped how we create and consume media, and video generation is one of the fastest-moving frontiers. What once required cameras, sets, and actors can now be simulated using algorithms that transform a static photo and an audio file into a moving, expressive video. The ambition goes beyond simple lip-syncing: researchers are working toward generating natural facial expressions, hand movements, and full-body gestures that align with speech and emotion.

The promise is huge—content creators, educators, marketers, and filmmakers could one day generate realistic videos in minutes, without expensive equipment or studios. Yet the technology is still uneven. While academic teams are demonstrating full-body motion synthesis, most tools available to the public remain limited to talking-head videos. This gap between research and everyday usability is where curiosity often turns into confusion: people hear about breakthroughs, but when they go looking for a free, accessible model, the options are far narrower.

This article explores the current landscape—what’s happening in research labs, what free tools exist today, and why truly free, full-body video generation remains just out of reach.

Current Research Models

The most exciting progress is coming from research labs, where teams are experimenting with new architectures to combine speech, image, and motion into coherent video outputs. Each model approaches the challenge differently, and most are still resource-heavy or require technical expertise to run.

- OmniHuman-1

Developed by a ByteDance research group, OmniHuman-1 is one of the most advanced systems in this field. It can take a single image and an audio clip, then generate a full-body performance with synchronized lip movement, facial expression, and gestures. Unlike earlier systems limited to upper-body animation, OmniHuman-1 integrates pose estimation and body dynamics to make the subject appear more lifelike. The tradeoff is accessibility: while demo results are impressive, the system is not packaged for everyday creators and still sits primarily in the research domain. - SPACE (Speech-driven Portrait Animation with Controllable Expression)

SPACE narrows its focus to the head and shoulders but pushes for precision and realism. It allows fine-grained control over facial expressions and head pose, making it useful for scenarios like digital presenters or expressive avatars. While it doesn’t extend to full-body motion, the model demonstrates how nuanced expression control can make AI-generated characters more believable. - EMAGE (Expressive Motion from Audio and Gesture Estimation)

EMAGE stands out because it explicitly tackles full-body gesture generation. By combining audio features with structured mesh representations like SMPL-X (for body) and FLAME (for face), it produces coherent movements that match speech rhythm and intonation. The system is designed more for motion synthesis pipelines—where avatars are rigged with meshes—than for turnkey video tools, but it marks a clear step toward bridging speech and natural full-body motion. - AD-NeRF (Audio-Driven Neural Radiance Fields)

This model takes a novel approach using NeRFs (Neural Radiance Fields) to synthesize realistic talking heads and partial upper-body motion. The outputs often capture fine detail and realism, especially in facial regions. However, NeRF-based systems are computationally heavy and not yet practical for casual use. They also remain limited to upper-body animation rather than full-body video.

Collectively, these models highlight a trend: while face and upper-body animation are already accessible, extending that realism to the full body is far more complex. The research is advancing quickly, but the gap between prototype and user-ready tool is still wide.

Tools Available to the Public

While research models showcase the cutting edge, most people are more interested in tools they can use today without needing a GPU cluster or coding skills. Public-facing platforms do exist, but their capabilities are more modest compared to research demos. Most services emphasize accessibility over realism, which means they often stop at talking heads or avatar-style videos.

- HeyGen

HeyGen is a popular online tool that allows users to animate avatars with text or audio. It offers expressive talking heads and upper-body avatars designed for marketing videos, tutorials, or social content. The free trial is limited in length and features, but it gives a taste of AI-driven video without requiring technical knowledge. While engaging, HeyGen’s avatars tend to look polished but slightly robotic compared to research-grade outputs. - Synthesia

Synthesia focuses on professional-looking avatars that can deliver scripted speech in multiple languages. Unlike HeyGen, it offers some full-body avatars, though they are more presentation-style than natural, free-flowing humans. The platform is geared toward corporate training, marketing, and education. Free access is restricted to short demo videos, with most features locked behind paid plans. - Vozo.ai

Vozo.ai leans into talking-photo animations, where a still portrait can be brought to life with lip-sync and simple expressions. It’s less about full-body performance and more about giving static images a sense of presence. This makes it suitable for personalized messages or creative experiments but not for realistic, expressive character generation. - NeuralFrames

NeuralFrames provides tools for generating audio-reactive visuals and stylized animations. While not full-body or hyper-realistic, it shows how AI can create engaging media experiences without heavy technical setup. It is closer to artistic expression than photorealistic video. - Hugging Face Spaces

For those curious about research without diving into raw code, Hugging Face Spaces is an excellent playground. Developers host demos of experimental models where users can try uploading images and audio to see animated results. Most of these projects focus on head and face animation rather than full-body motion. Still, platforms like Hugging Face are often the first place where cutting-edge academic models—such as EMAGE or other gesture-driven systems—get shared in semi-accessible form.

Together, these tools reflect the current trade-off: accessibility versus realism. Public platforms make AI video creation easy to try, but they rarely deliver the depth and authenticity of body language demonstrated in research. For creators, they can be useful stepping stones while waiting for more advanced models to filter down into user-friendly apps.

Conclusion

The ability to turn a single photo and an audio clip into a convincing video of a person speaking, moving, and gesturing is no longer a distant dream—it is a reality demonstrated in labs around the world. Research models like OmniHuman-1 and EMAGE show that full-body generation is technically possible, even if the systems remain complex and resource-heavy. They prove that AI can go beyond lip-syncing and subtle facial animations to capture the rhythm of speech in hand movements, posture, and body language.

Yet when the lens shifts from research to public use, the picture changes. Everyday creators currently rely on commercial platforms such as HeyGen or Synthesia, which simplify the process but deliver more constrained results—polished talking heads or corporate-style avatars rather than expressive full-body performances. Experimental demos on platforms like Hugging Face Spaces provide glimpses of what’s possible, but they are still prototypes.

This gap between breakthrough research and accessible tools is where the field now stands. The technology is advancing at an extraordinary pace, and what is limited today could become commonplace within a few years. For now, anyone eager to explore AI video generation must choose between experimenting with early research code or working within the boundaries of commercial trial platforms.

The trajectory is clear: as models get more efficient and easier to deploy, full-body expressive video generation will move from niche experiments to everyday creative tools. When that shift happens, the way we produce content—marketing, education, entertainment, and even personal communication—will transform dramatically.