How the tools of modern biology moved from elite labs to the wider world — and why that matters

Thirty years ago, a frontier capability developed by DARPA or a university lab came wrapped in racks of expensive hardware, security guards, and bureaucratic approvals. Today, the core capabilities of modern biology (reading DNA, writing short stretches of DNA, editing genes with CRISPR, building useful biological tools with viral vectors) can be prototyped with devices and services that are faster, cheaper, and far more widely available.

That sentence is easy to misread as cheerleading for progress. It is not. It is a landscape description. The same forces that make lifesaving vaccines, rapid diagnostics, and climate-helpful microbes possible also reduce the barriers that once confined risk to a few institutional sites. Power has a new topology: flatter, more networked, and much harder to control.

Below I unpack what’s changed, why it’s dangerous if we ignore it, and what the prudent public should demand from scientists, companies, and governments.

What “spreading power” looks like in practice

Think in three linked moves:

- Tools become cheaper. Hardware that once cost hundreds of thousands of dollars now has lower-cost equivalents. Software that once required specialized engineers is increasingly user-friendly.

- Services outsource risk. Today you can have DNA sequenced or order synthesized genetic material from commercial providers around the world. That convenience accelerates research but also creates many diffuse points of entry into biological systems.

- Communities spread knowledge. Open-access papers, online tutorials, hobbyist biology groups, and community labs make basic biotech know-how intelligible to thousands of curious people rather than a few credentialed specialists.

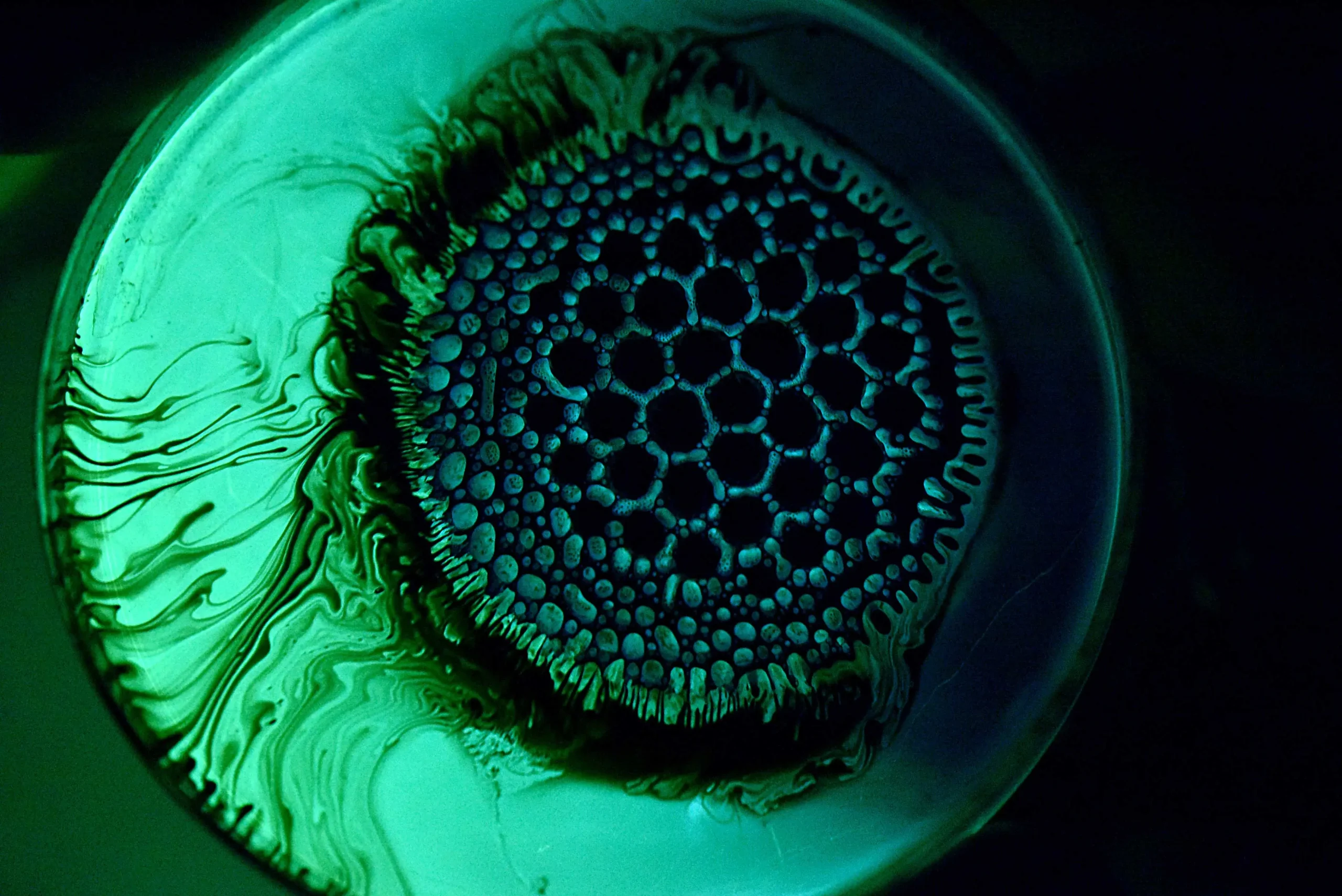

Put together: the technical and institutional moat that once kept high-risk experiments inside a few well-resourced institutions is eroding.

Why this is more worrying than it sounds

The concern is not that “bad actors” will immediately create movie-style super-bugs. The concern is both more prosaic and more systemic:

- More actors, more failure modes. With many more hands on complex systems, the probability of accidental harm rises. Biology is sloppy: mistakes cascade.

- Faster cascades. Biological processes — like epidemics — can amplify exponentially. A small, localized problem can become global quickly.

- Dual-use ambiguity. The same technique that improves crop yield can, in a different project and different hands, be used to alter a pathogen in dangerous ways. Distinguishing benign from risky intent is not always straightforward.

- Gaps in governance. Laws, norms, and inspection systems were designed for an older world. They are siloed, slow, and uneven across countries. While technologists sprint, institutions lag.

- Unequal global preparedness. Sequencing labs and rapid-response capacity are concentrated in wealthy countries. A biological event in a low-capacity region can fester undetected and then spread, which makes the distribution of vulnerability deeply unfair.

So the threat is not a single dramatic act but an aggregate risk: many small failures, some malicious attempts, and governance blind spots combining into a systemic hazard.
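
The first two points above lend themselves to back-of-the-envelope arithmetic. The sketch below is only an illustration, not a risk model. It assumes every actor has the same small, independent chance of an incident (the 1-in-10,000 figure is invented for the example) and that an outbreak doubles cleanly at every step; neither assumption holds in the real world. The function names are hypothetical.

```python
def prob_at_least_one_incident(p_per_actor: float, n_actors: int) -> float:
    """Chance that at least one of n independent actors has an incident.

    Assumes every actor has the same probability p_per_actor (an
    illustrative simplification, not an empirical estimate).
    """
    return 1.0 - (1.0 - p_per_actor) ** n_actors


def cases_after(doublings: int, initial_cases: int = 1) -> int:
    """Case count after a number of clean doublings (idealized growth)."""
    return initial_cases * 2 ** doublings


if __name__ == "__main__":
    # Made-up per-actor incident probability: 1 in 10,000.
    p = 1e-4
    for n in (10, 1_000, 100_000):
        print(f"{n:>7} actors -> P(at least one incident) = "
              f"{prob_at_least_one_incident(p, n):.4f}")

    # Idealized exponential amplification from a single case.
    print("cases after 20 doublings:", cases_after(20))
```

Twenty clean doublings turn a single case into just over a million, and the chance of at least one incident climbs toward certainty as the number of actors grows, even when each individual actor is very careful.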

Real examples

You don’t need a sci-fi thriller to see the problem. Consider how quickly COVID-19 spread before many countries recognized the scale of the threat; consider also how fast genomic tools were mobilized to identify the virus and build vaccines. That same duality, speed to harm and speed to remedy, characterizes present-day biology.

Similarly, small community laboratories have done wonderful, lawful citizen science. But when experiments with high-consequence potential occur outside rigorous oversight, even well-intentioned projects can create exposure routes that are hard to trace or contain.

Why we’re not treating this like the systemic risk it is

- Out of sight, out of mind. Threats that are technical, slow-burn, and hard to dramatize fail to mobilize voters and politicians until crisis hits.

- Economic incentives. Biotech is big business. Companies push for innovation speed and regulatory flexibility. That can dampen the political appetite for stringent guardrails.

- Sovereignty friction. Effective global governance would require cross-border sharing, inspections, and capacity building — politically sensitive asks for sovereign states.

- Expertise mismatch. Policymakers often lack the deep, current technical literacy to weigh nuanced trade-offs. That puts those who urge caution at a disadvantage, since they are often outspent by those urging speed.

What responsible action looks like (high level)

We must act like a civilization that understands how systemic risks work: we limit probability, reduce vulnerability, and build resilient response capacity. Importantly, these are governance and social choices, not impossible technical bans.

1. Surveillance that’s pathogen-agnostic

Invest in systems that spot unusual biological signals quickly — wastewater monitoring, genomic surveillance networks, and syndromic monitoring — and ensure they are global, not just concentrated in wealthy countries.

2. Transparent, proportionate norms for risky research

Require peer-reviewed risk assessments before high-consequence work is funded, and make the process open rather than a matter of secret edicts. Use independent committees drawn from science, ethics, and public health to evaluate projects.

3. Responsible commercial practices

Encourage DNA synthesis companies, commercial sequencing providers, and online reagent suppliers to adopt robust risk-screening and reporting standards — industry codes that are audited externally.

4. Build global capacity fast

We must elevate lab safety and pathogen detection everywhere. Donors and multilateral banks should prioritize biosafety capacity in low- and middle-income countries as seriously as they do pandemic preparedness financing.

5. Red-teaming and stress tests

Fund independent, ethical “red teams” and scenario exercises that probe vulnerabilities without publishing sensitive technical findings. The objective is to reveal governance gaps, not to teach harm.

6. Public literacy and civic oversight

Citizens need accessible explanations of both benefits and risks. Democracies should empower civil society and journalists to scrutinize biotech developments constructively.

What different audiences can press for

- Policymakers: Create fast, flexible regulatory mechanisms that match the pace of innovation — and fund global surveillance and response.

- Funders: Tie grants for advanced biological work to demonstrable safety and transparency commitments.

- Industry: Adopt independent audits and public reporting on safety practices; form cross-industry coalitions to raise norms.

- Scientists: Lead on norms and refuse to treat risk assessment as a siloed, bureaucratic afterthought.

- Public & media: Demand clarity — ask how new biotech projects are governed and who is accountable.

A final, plainspoken thought

Technological power is not neutral. Democracy, safety and economic benefit are not inevitable consequences of innovation; they are political outcomes shaped by policy, by institutions, and by public will. We can treat the democratization of biological capability as a civilizational dividend — bringing health and opportunity to billions — or we can treat it as a latent catastrophe waiting for a trigger.

Choosing the first option takes effort: funding, international cooperation, honest public debate and sensible regulation. It’s tedious, contested, and expensive — and that is exactly the point. The real danger in our era is not only the bad things that can happen; it’s our collective reluctance to do the boring, difficult work that prevents them.