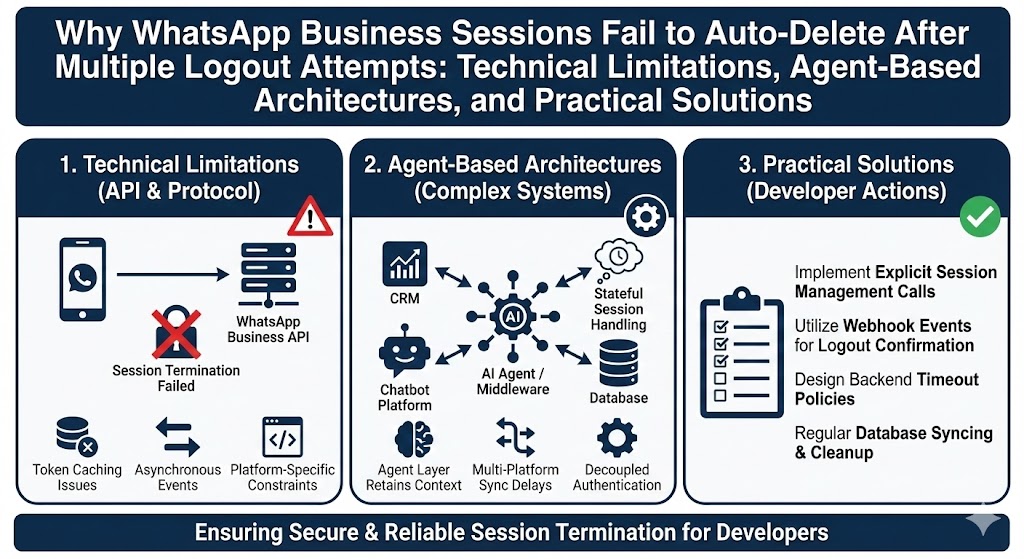

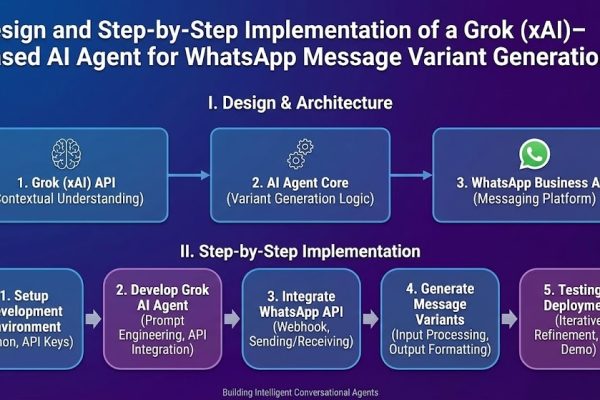

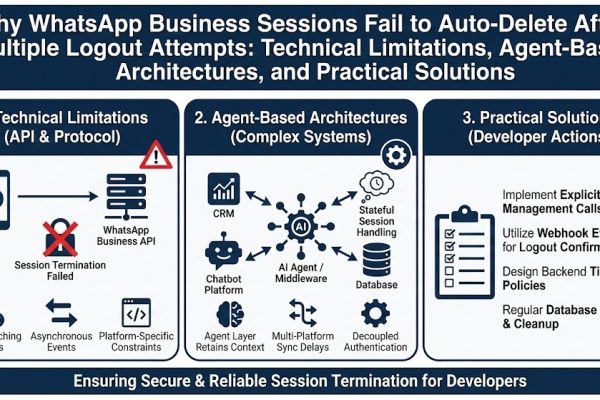

Why WhatsApp Business Sessions Fail to Auto-Delete After Multiple Logout Attempts: Technical Limitations, Agent-Based Architectures, and Practical Solutions for Developers

This article explains why WhatsApp Business sessions stop auto-unlinking after multiple deletion attempts, even when the logout is issued through a library such as Baileys. It compares WhatsApp and WhatsApp Business behavior, explains the backend limitations involved, and presents production-grade solutions for students and developers building WhatsApp automation systems.